Topological Qubits and Microsoft’s Quantum Breakthrough: How They Work and Why They Matter

In 2025, quantum computing looks closer to real-world application than ever, in large part because of Microsoft’s announcement that it has demonstrated a functional topological qubit. If the result holds up, it represents a shift from theoretical frameworks to tangible progress in the quest for scalable quantum systems. The approach aims to address some of the biggest challenges in quantum error correction and could lay the foundation for a new generation of stable, fault-tolerant quantum processors.

The Science Behind Topological Qubits

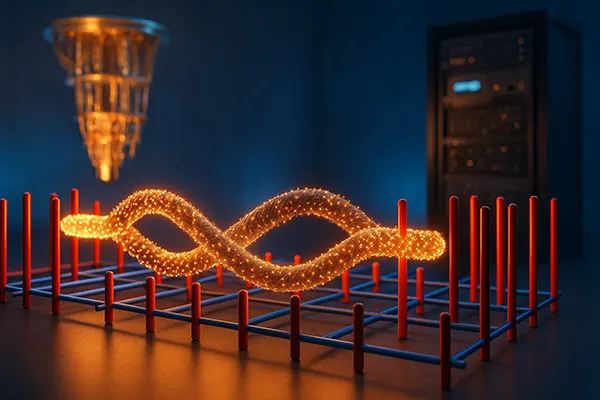

At the heart of quantum computing lies the qubit, a unit of information that can exist in a superposition of 0 and 1 rather than holding just one of the two values. However, conventional qubits are fragile: interactions with their environment, such as heat, stray electromagnetic fields or material defects, quickly introduce errors. Microsoft’s research focuses on topological qubits, an approach that uses exotic quasiparticles known as Majorana zero modes to encode information in a way that is intrinsically protected from local noise.
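To make the noise problem concrete, here is a minimal Python sketch of a single qubit as a pair of complex amplitudes. It is a toy simulation, not Microsoft’s software, and every function name is a hypothetical choice for illustration. A Hadamard gate creates an equal superposition, a phase shift stands in for an environmental disturbance, and a second Hadamard converts that phase into a measurable probability. With no disturbance the outcome is always 0; when the phase is scrambled from run to run, the result degrades to a coin flip.

```python
import cmath
import math

# A single-qubit state is a pair of complex amplitudes (alpha, beta) for |0> and |1>.
State = tuple[complex, complex]


def hadamard(state: State) -> State:
    """Apply the Hadamard gate, mapping |0> to an equal superposition of |0> and |1>."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def phase_kick(state: State, phi: float) -> State:
    """Shift the relative phase of |1> by phi radians (a toy model of environmental noise)."""
    a, b = state
    return (a, b * cmath.exp(1j * phi))


def prob_zero(state: State) -> float:
    """Born rule: probability of measuring 0 (renormalised to absorb rounding error)."""
    a, b = state
    return abs(a) ** 2 / (abs(a) ** 2 + abs(b) ** 2)


def interference_experiment(phi: float) -> float:
    """Prepare |0>, create a superposition, suffer a phase error phi, then interfere."""
    state: State = (1 + 0j, 0j)
    state = hadamard(state)
    state = phase_kick(state, phi)
    state = hadamard(state)
    return prob_zero(state)  # analytically cos^2(phi / 2)


def averaged_over_noise(phases: list[float]) -> float:
    """Average outcome when the phase error varies from run to run."""
    return sum(interference_experiment(p) for p in phases) / len(phases)


if __name__ == "__main__":
    print(interference_experiment(0.0))  # no phase error: always measures 0
    print(interference_experiment(math.pi / 3))
    uniform = [2 * math.pi * k / 16 for k in range(16)]
    print(averaged_over_noise(uniform))  # fully scrambled phase: about 0.5
```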

Unlike conventional superconducting circuits (such as transmons) or trapped-ion qubits, which store information in the state of an individual physical system, topological qubits rely on the topological properties of matter. The quantum state is distributed across separate physical locations, for example the two ends of a nanowire, so a disturbance acting at any single point cannot by itself read out or corrupt it. The resulting system could be inherently more robust, which would make quantum computations more reliable and easier to scale.
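The idea of storing a state across distant locations can be illustrated with the Kitaev chain, a textbook toy model of a one-dimensional topological superconductor. It is not a model of Microsoft’s actual devices. The plain-Python sketch below assumes the special case where the hopping t equals the pairing Δ. In that case, written in the Majorana basis, the chain reduces to a tridiagonal matrix, and its eigenvalues (found here by Sturm-sequence bisection) are the single-particle excitation energies. In the topological regime (|μ| < 2t), a pair of near-zero-energy modes appears, one at each end of the wire, and their energy splitting shrinks exponentially as the wire gets longer. That exponential suppression is the essence of the protection described above. All function names and parameter values are illustrative assumptions.

```python
# Toy model: Kitaev chain on the special line t == Delta (an assumption of this sketch).
# In the Majorana basis (a_1, b_1, a_2, b_2, ..., a_N, b_N) only neighbours couple:
# a_j--b_j with strength |mu| and b_j--a_{j+1} with strength 2|t|. The single-particle
# energies are the eigenvalues of this zero-diagonal symmetric tridiagonal matrix,
# which come in +/- pairs.


def majorana_bonds(n_sites: int, mu: float, t: float) -> tuple[list[float], list[float]]:
    """Return (diagonal, off_diagonal) of the tridiagonal matrix for an N-site chain."""
    off: list[float] = []
    for j in range(n_sites):
        off.append(abs(mu))  # on-site chemical potential pairs a_j with b_j
        if j < n_sites - 1:
            off.append(2 * abs(t))  # hopping + pairing (t == Delta) pairs b_j with a_{j+1}
    return [0.0] * (2 * n_sites), off


def count_below(diag: list[float], off: list[float], x: float) -> int:
    """Sturm count: number of eigenvalues strictly below x (negative LDL^T pivots)."""
    count, q = 0, 1.0
    for i, d in enumerate(diag):
        q = (d - x) - (off[i - 1] ** 2 / q if i > 0 else 0.0)
        if q == 0.0:
            q = 1e-300  # nudge an exact zero pivot off zero
        if q < 0.0:
            count += 1
    return count


def kth_eigenvalue(diag: list[float], off: list[float], k: int, tol: float = 1e-13) -> float:
    """k-th smallest eigenvalue (0-based) by bisection on the Sturm count."""
    bound = max(abs(d) for d in diag) + 2 * max(off, default=0.0) + 1.0  # safe spectral bound
    lo, hi = -bound, bound
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if count_below(diag, off, mid) > k:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def lowest_excitation(n_sites: int, mu: float, t: float = 1.0) -> float:
    """Smallest single-particle energy; near zero signals a pair of Majorana end modes."""
    diag, off = majorana_bonds(n_sites, mu, t)
    # The spectrum is symmetric about zero, so sorted index n_sites is the
    # smallest non-negative eigenvalue.
    return abs(kth_eigenvalue(diag, off, n_sites))


if __name__ == "__main__":
    for n in (4, 8, 16):
        # mu = 0.5 is topological (|mu| < 2t); mu = 3.0 is trivial (|mu| > 2t).
        print(n, lowest_excitation(n, mu=0.5), lowest_excitation(n, mu=3.0))
```

In the trivial regime the lowest energy stays near the bulk gap no matter how long the chain is; only in the topological regime does a shared, almost zero-energy mode emerge at the two ends.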

In 2024, Microsoft and its research partners reported measurements in semiconductor–superconductor hybrid structures that are consistent with Majorana modes. The company presents these results as the first concrete evidence of topological protection, a milestone widely regarded as critical for the industry, although independent physicists continue to debate how conclusive the data are.

Why This Approach Matters

The implications of Microsoft’s work extend far beyond theoretical physics. Topological qubits could allow quantum computers to perform complex calculations with far less error-correction overhead than today’s designs, which must spread each logical qubit across many physical qubits and run correction cycles continuously. This would dramatically reduce both hardware costs and computational overhead, paving the way for practical quantum applications in chemistry, cryptography and materials science.
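The overhead argument can be made concrete with a common rule of thumb for the surface code, a conventional error-correcting code rather than Microsoft’s topological scheme. Under this rule, a distance-d patch uses roughly 2d² physical qubits and suppresses errors as p_L ≈ A·(p/p_th)^((d+1)/2). The prefactor A = 0.1, the threshold p_th = 1% and the target logical error rate below are illustrative assumptions, and the function name is hypothetical. The sketch shows how a lower physical error rate p, which is what topological protection aims to deliver, shrinks the code distance and qubit count required.

```python
from dataclasses import dataclass


@dataclass
class Overhead:
    distance: int
    physical_qubits: int
    logical_error: float


def surface_code_overhead(p: float, target: float,
                          p_threshold: float = 1e-2, prefactor: float = 0.1,
                          max_distance: int = 201) -> Overhead:
    """Smallest odd code distance d whose estimated logical error meets the target.

    Uses the heuristic p_L ~ prefactor * (p / p_threshold) ** ((d + 1) / 2) and
    roughly 2 * d**2 physical qubits per logical qubit (data plus measurement qubits).
    Both formulas and the default constants are rough assumptions, not exact values.
    """
    if not 0 < p < p_threshold:
        raise ValueError("heuristic only applies below the threshold error rate")
    for d in range(3, max_distance + 1, 2):
        p_logical = prefactor * (p / p_threshold) ** ((d + 1) / 2)
        if p_logical <= target:
            return Overhead(d, 2 * d * d, p_logical)
    raise ValueError("target not reachable within max_distance")


if __name__ == "__main__":
    # Lower physical error rates need far fewer physical qubits per logical qubit.
    for p in (5e-3, 1e-3, 1e-4):
        print(p, surface_code_overhead(p, target=1e-12))
```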

Furthermore, the topological model aligns with the concept of modular quantum architecture, allowing researchers to interconnect smaller stable units into larger, more powerful systems. Such scalability is crucial for developing commercial-grade quantum processors that could eventually outperform classical supercomputers in specific problem domains.

Microsoft’s breakthrough also demonstrates the company’s long-term commitment to developing its Azure Quantum ecosystem. By focusing on a physics-based foundation rather than short-term performance, the firm is positioning itself to lead in the emerging field of quantum cloud computing by the late 2020s.

Microsoft’s Path Toward Scalable Quantum Computing

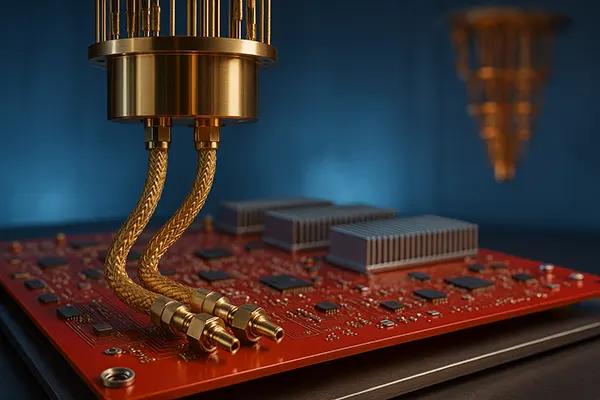

Over the last decade, Microsoft’s quantum research team has combined advanced materials science, cryogenic engineering, and software innovation to build an integrated quantum technology stack. Their approach unites hardware development, quantum algorithms, and simulation tools into one coherent framework — designed for both research and future industry deployment.

The Azure Quantum platform (now a suite of services and SDKs) enables scientists to experiment with hybrid algorithms that combine quantum and classical resources. As topological qubits mature, these tools are expected to play an essential role in the transition from laboratory demonstrations to full-scale quantum systems.
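A hybrid algorithm alternates between two steps. A quantum processor estimates an expectation value for a parameterised circuit, and a classical optimiser updates the parameters based on that estimate. The Python sketch below, a variational loop in the style of VQE, shows that division of labour under simplifying assumptions. The "quantum" step is simulated exactly for a single qubit prepared by an R_y(θ) rotation, the Hamiltonian H = Z + X is an arbitrary toy choice, and gradients use the parameter-shift rule. It does not use the Azure Quantum SDK, and all function names are hypothetical.

```python
import math


def prepare_state(theta: float) -> tuple[float, float]:
    """Amplitudes of R_y(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1> (real for R_y)."""
    return math.cos(theta / 2), math.sin(theta / 2)


def quantum_expectation(theta: float) -> float:
    """Stand-in for the quantum step: <psi|Z + X|psi> for the prepared state.

    On hardware this would be estimated from repeated measurements; here it is exact.
    """
    a, b = prepare_state(theta)
    expect_z = a * a - b * b  # <Z> = |a|^2 - |b|^2
    expect_x = 2 * a * b  # <X> = 2 Re(conj(a) * b) for real amplitudes
    return expect_z + expect_x


def parameter_shift_gradient(theta: float) -> float:
    """Exact gradient for a rotation gate from two shifted circuit evaluations."""
    shift = math.pi / 2
    return 0.5 * (quantum_expectation(theta + shift) - quantum_expectation(theta - shift))


def hybrid_minimise(theta: float = 0.1, learning_rate: float = 0.1,
                    steps: int = 500) -> tuple[float, float]:
    """Classical outer loop: gradient descent on the quantum-estimated energy."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


if __name__ == "__main__":
    theta, energy = hybrid_minimise()
    print(f"theta = {theta:.4f}, energy = {energy:.6f}, exact minimum = {-math.sqrt(2):.6f}")
```

The classical side never inspects the quantum state directly. It only consumes expectation values, which is what allows the same outer loop to run against a simulator or, in principle, real hardware.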

In 2025, the company’s roadmap includes the first prototype chip integrating multiple topological qubits into a single circuit. This prototype aims to demonstrate logical qubit operation, in which one error-protected qubit is encoded across several physical qubits. That is a key step toward building fault-tolerant machines capable of running complex algorithms beyond classical reach.
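The logic behind a logical qubit can be illustrated with the simplest error-correcting code, the repetition code. It copies one bit into n physical bits and decodes by majority vote. This classical toy handles only bit-flip errors and is not the scheme Microsoft plans to use. It is included here, with hypothetical function names, to show why spreading one logical bit across several noisy physical bits lowers the error rate when the physical error probability p is small, and why it backfires when p is large.

```python
from itertools import product


def majority_decode(bits: tuple[int, ...]) -> int:
    """Decode a repetition-code block by majority vote (n is assumed odd)."""
    return int(sum(bits) > len(bits) // 2)


def logical_error_rate(n: int, p: float) -> float:
    """Exact probability that majority vote returns the wrong bit.

    Enumerates every bit-flip pattern on n physical bits, assuming each bit flips
    independently with probability p, starting from the encoded logical 0 (all zeros).
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of physical bits so the vote cannot tie")
    total = 0.0
    for flips in product((0, 1), repeat=n):
        k = sum(flips)
        probability = p ** k * (1 - p) ** (n - k)
        if majority_decode(flips) != 0:  # received word is 0...0 XOR flips
            total += probability
    return total


if __name__ == "__main__":
    for n in (1, 3, 5):
        print(n, logical_error_rate(n, p=0.01))
```

For small p, each extra pair of physical bits multiplies the logical error rate by roughly another factor of p. Above p = 0.5 the encoding makes things worse, a simple analogue of the threshold behaviour that real quantum codes share.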

Challenges and Remaining Questions

Despite this progress, significant challenges remain. Producing stable Majorana states requires near-perfect control of material purity and interface quality. Even minor imperfections in semiconductor nanowires or superconducting layers can destroy the delicate topological state.

Moreover, scaling from a few qubits to thousands requires breakthroughs in cryogenic infrastructure and quantum interconnects. Microsoft and academic collaborators are investing heavily in nanoscale fabrication techniques and low-noise measurement systems to overcome these barriers.

Another open question is how software frameworks will adapt to the new hardware paradigm. While Microsoft’s Q# language and simulation tools are already compatible with multiple qubit types, the real test will come once topological devices begin performing full-scale computations under realistic conditions.

The Future of Topological Quantum Computing

Proponents expect topological quantum computing to shape the next decade of research and development in the field. The approach’s stability advantages could make it the first viable route to practical quantum advantage, in which quantum systems consistently outperform classical ones on real-world tasks.

Microsoft’s continued focus on open collaboration and peer-reviewed publication has also attracted attention from major scientific institutions. By sharing experimental data and methodologies, the company is helping to establish new standards for reproducibility and transparency in quantum research.

In a broader context, topological qubits could revolutionise industries dependent on computation, from secure communication to pharmaceutical discovery. The first large-scale quantum accelerators built on this principle may enable molecular modelling and cryptographic analysis previously thought impossible with conventional computing.

What Lies Ahead

As of 2025, Microsoft’s quantum programme remains in the experimental phase, but each step forward brings the technology closer to commercial viability. The next milestones will likely involve hybrid quantum–classical experiments running on partially topological systems.

Researchers anticipate that within the next five years, topological qubits will move from demonstrations on individual devices to functional qubit arrays. Such arrays could maintain coherence for extended periods, drastically reducing the error rates that currently limit quantum performance.

Ultimately, the success of Microsoft’s approach could reshape the competitive landscape of quantum computing, establishing a new paradigm that blends physics, engineering, and computation into a single, stable framework for the future of technology.